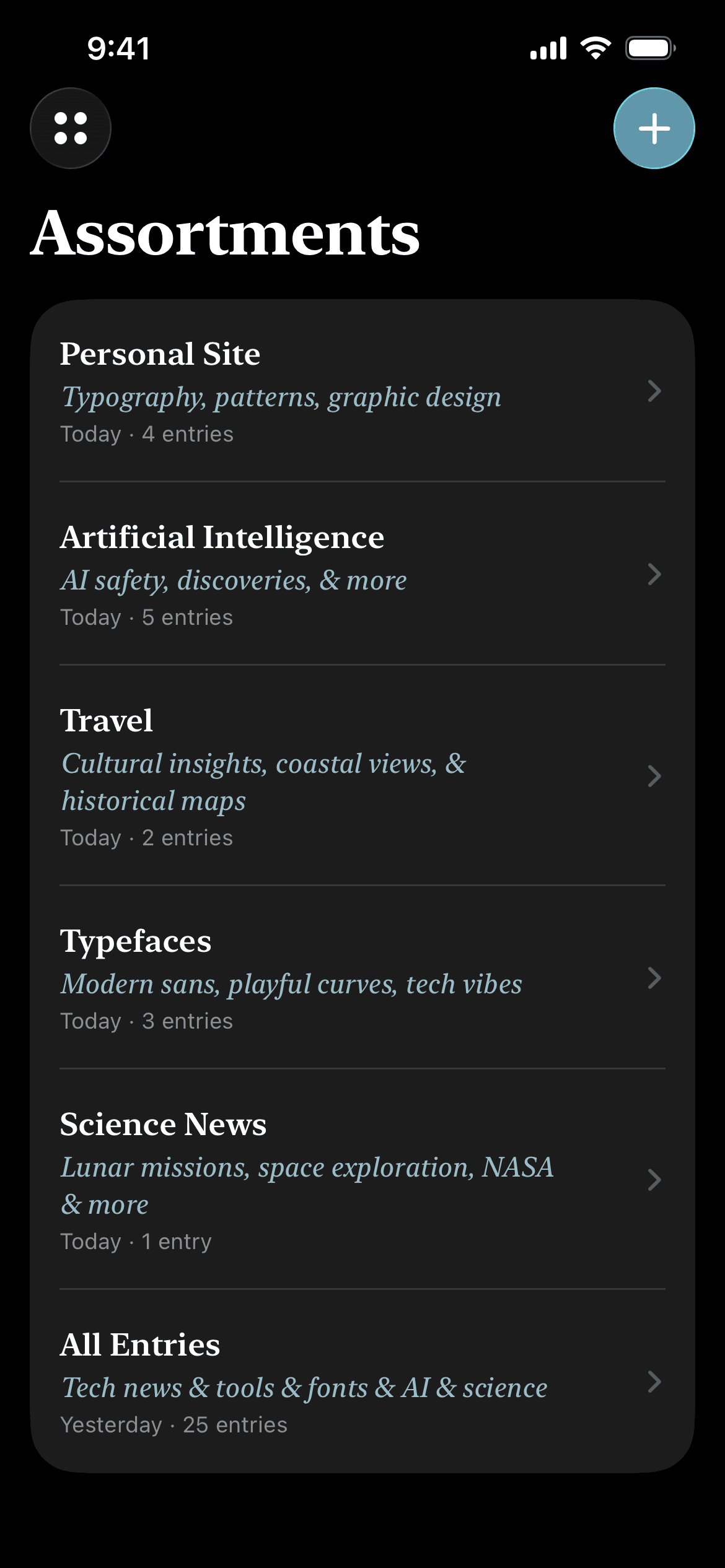

Larger and larger frontier models tend to get the overwhelming majority of our collective attention. I wanted to explore what current on-device models might be capable of, and what their peculiarities meant for developing applications with them. To that end, I built a simple bookmark manager called Assortments.

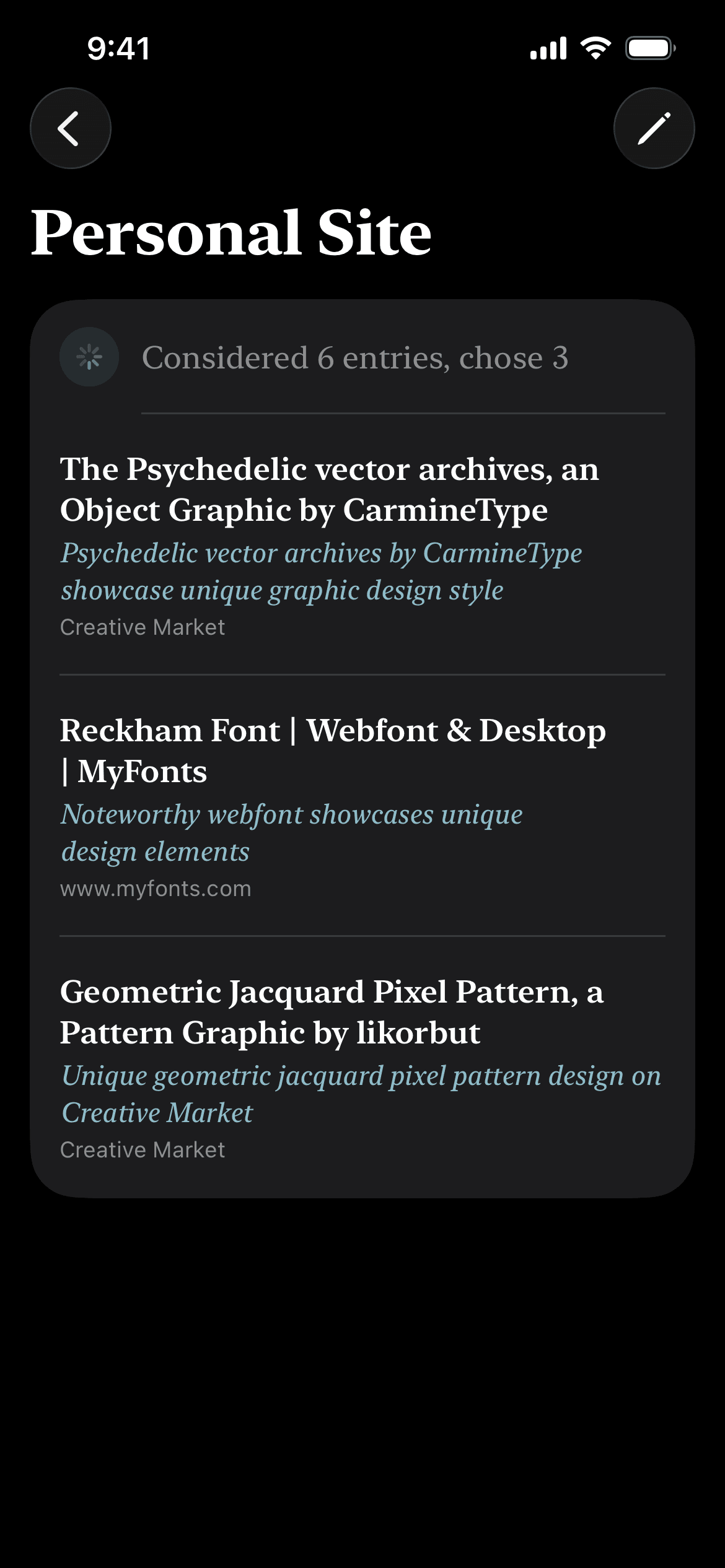

Individual bookmark entries are grouped together into user-defined collections. So far, pretty typical. What makes it odd is that you don’t decide which assortments an entry is included in; instead, you provide a natural language description of what you want included in — or excluded from — an assortment, and bookmarks get sorted automatically.

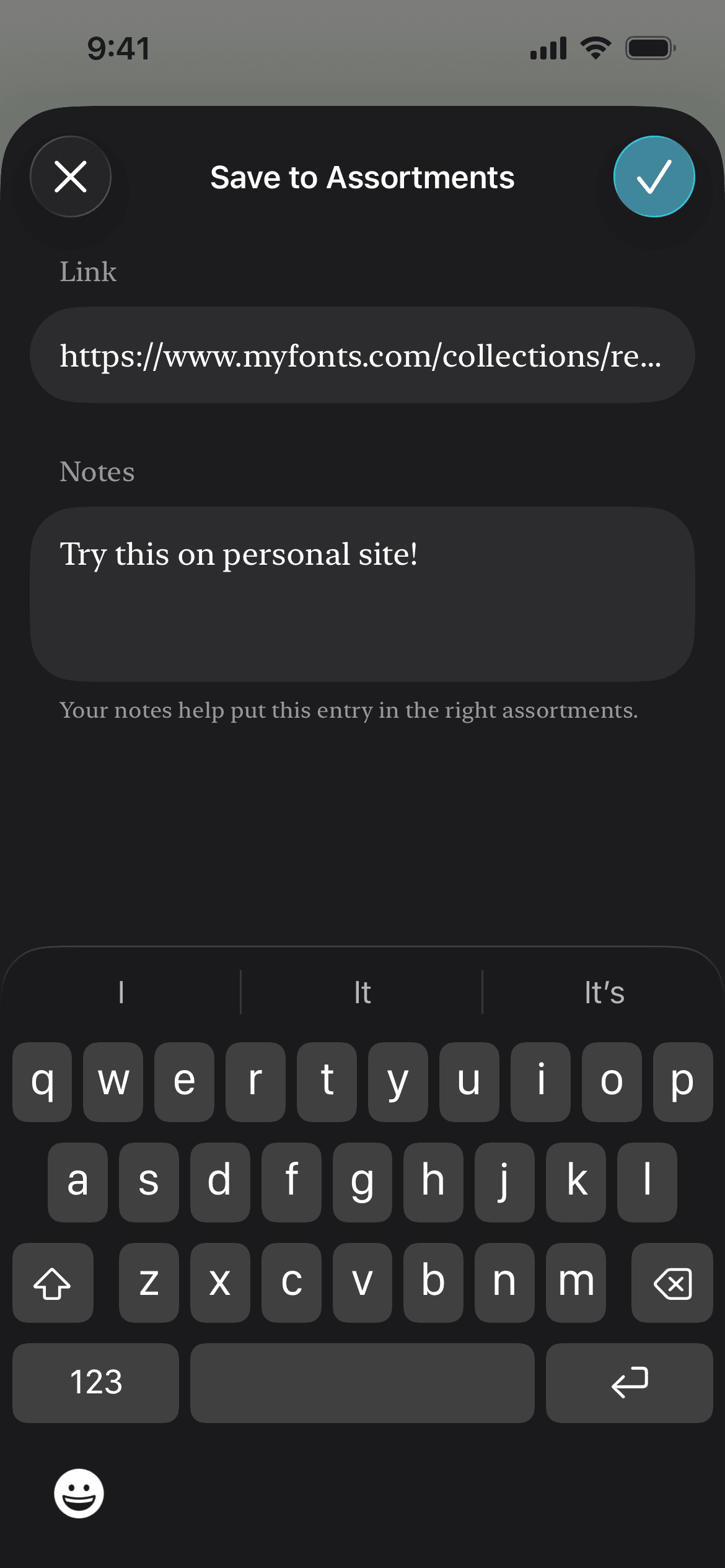

Bookmarks can be saved from within the app or the iOS share menu. When saving a bookmark, you can also include notes to help it get sorted to the right place.

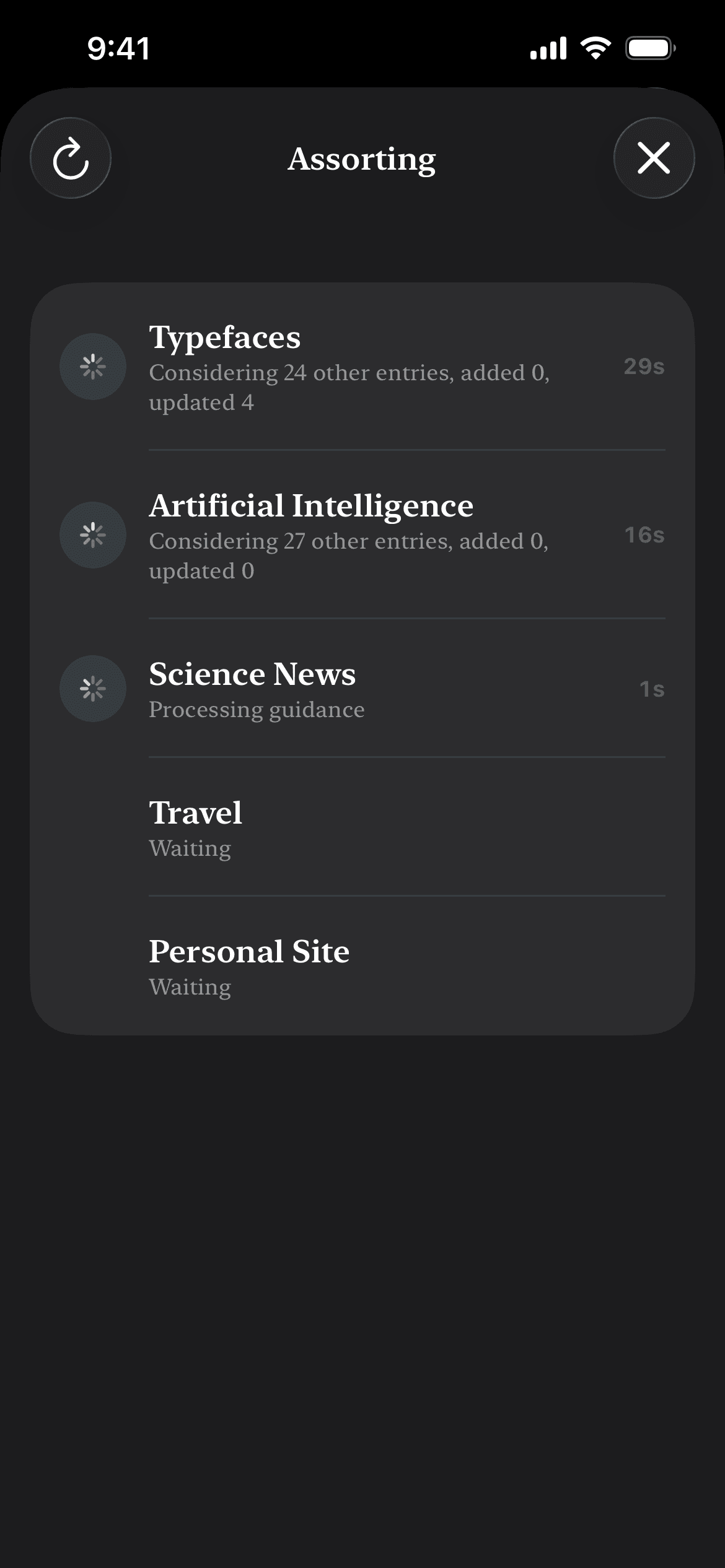

I chose this app concept as a testing ground for local models because I knew that the generative processing could be broken down cleanly into small, focused operations, an approach inspired by Maggie Appleton’s classic description of LLMs as “tiny reasoning engines”. Internally, the application assesses every single bookmark against every single assortment, first generating a reason the bookmark might or might not belong, then resolving that reason to a boolean. This multiplies the number of operations, of course, since the count grows with the product of bookmarks and assortments. But it also results in individual operations requiring limited context windows, and it allows for precisely modeling and displaying state. Indeed, state display was perhaps my favorite part of designing the app.

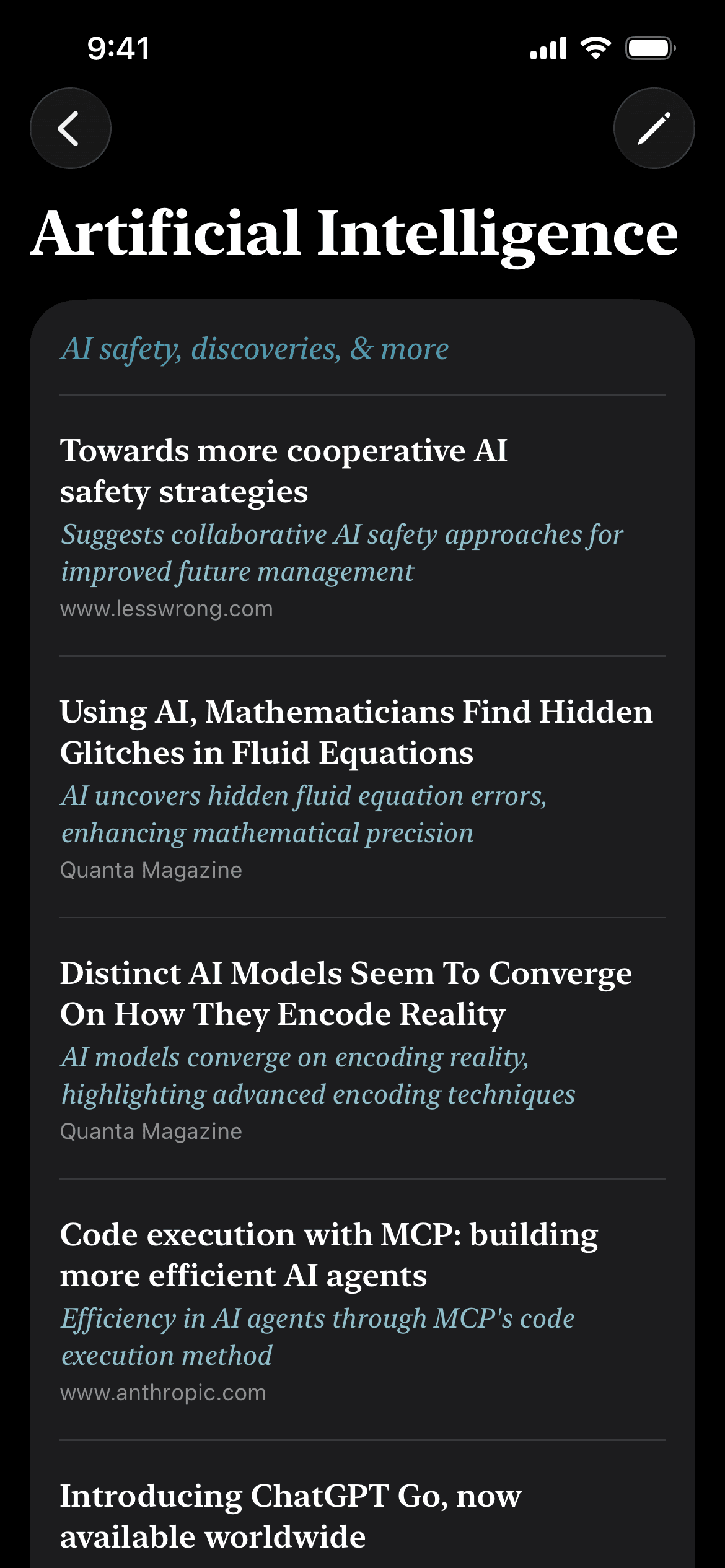
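The pairwise, two-step assessment described above can be sketched roughly as follows. This is a minimal illustration, not the app's actual code: the `Model` callable, the prompt wording, the `Verdict` type, and the keyword-matching `fake_model` are all hypothetical stand-ins for whatever the on-device model and prompts really look like.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for the on-device model: prompt text in, generated text out.
Model = Callable[[str], str]


@dataclass
class Verdict:
    bookmark: str
    assortment: str
    reason: str      # generated explanation, kept so it can be displayed as state
    included: bool   # the reason resolved to a yes/no decision


def assess(model: Model, bookmark: str, assortment: str) -> Verdict:
    # Step 1: generate a short reason the bookmark might or might not belong.
    reason = model(
        f"Assortment: {assortment}\nBookmark: {bookmark}\n"
        "In one sentence, explain why this bookmark does or does not belong."
    )
    # Step 2: resolve that reason to a boolean in a separate, narrow call.
    answer = model(f"Reason: {reason}\nDoes the bookmark belong? Answer yes or no.")
    return Verdict(bookmark, assortment, reason, answer.strip().lower().startswith("yes"))


def sort_all(model: Model, bookmarks: List[str], assortments: List[str]) -> List[Verdict]:
    # One assessment per (bookmark, assortment) pair: len(bookmarks) * len(assortments).
    return [assess(model, b, a) for b in bookmarks for a in assortments]


def fake_model(prompt: str) -> str:
    # Toy replacement for a real model (assumption): simple keyword matching.
    if prompt.startswith("Reason:"):
        return "yes" if "matches" in prompt else "no"
    lines = prompt.splitlines()
    assortment = lines[0].split(": ", 1)[1].lower()
    bookmark = lines[1].split(": ", 1)[1].lower()
    if assortment in bookmark:
        return "The bookmark matches the assortment."
    return "The bookmark is unrelated."


verdicts = sort_all(
    fake_model,
    ["Sourdough recipe", "Swift concurrency guide"],
    ["recipe", "swift"],
)
```

Each pair costs two small model calls, and every `Verdict` carries both the reason and the decision, which is what makes it straightforward to show per-bookmark state in the interface.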

By design, the app doesn’t have the typical trappings of AI apps. There’s no first-person identity, no chat bubbles, no gradients or stars, not even any real mention of artificial intelligence. And yet, the majority of the text on the app’s main screens is generated. This posed some key design questions: Does generated text warrant special treatment? Does it matter where the text came from?

I decided to render generated text in italic, theme-colored type, which served double duty by also giving the app a bit more visual texture. Over time, though, I suspect we’ll feel less and less need to explicitly mark generated text, especially in low-stakes contexts. In some sense, a generated preview blurb isn’t all that different from an entry count indicator: it’s a computed property, just using a newer type of computer.

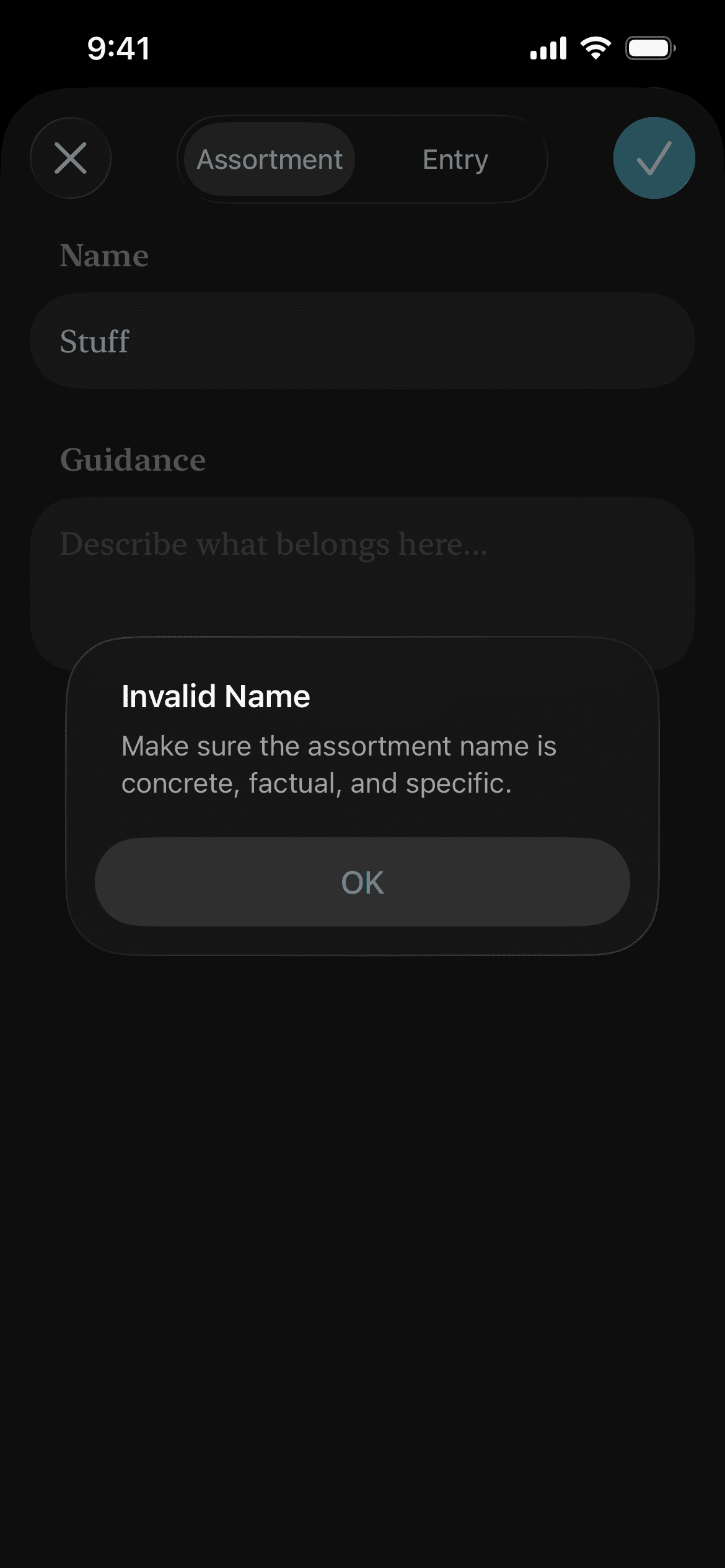

The app also includes model-based validation of user input, including for assortment names. This helps prevent attempting to sort based on unclear criteria (or potentially inconsiderate descriptors).

I admittedly didn’t pursue much automated testing for the app, but in the limited amount I did, I was struck by how odd quality assurance is for apps built with on-device models. Typically we think of using physical devices as the ultimate end-to-end tests: Hardware, software, network, battery, the whole shebang. But that’s not what I wanted — I actually wanted low-level component tests with just prompts, example data, and the model. I suspect the solution here may, amusingly, involve providing on-device models as a service for use during development. But for prototyping purposes, I just watched what the app did and winged it.
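As a rough illustration of the kind of low-level component test described above, the sketch below runs a classifier over a handful of labeled examples and reports the mismatches. Everything here is hypothetical: the `Case` type, the `run_cases` helper, and the `keyword_classifier` stub, which stands in for a real prompt-plus-model call so the example runs without a device.

```python
from typing import Callable, Iterable, List, NamedTuple


class Case(NamedTuple):
    bookmark: str
    assortment: str
    expected: bool  # whether a human would expect the bookmark to be included


def run_cases(classify: Callable[[str, str], bool], cases: Iterable[Case]) -> List[Case]:
    """Return the cases where the classifier disagrees with the expectation."""
    return [c for c in cases if classify(c.bookmark, c.assortment) != c.expected]


def keyword_classifier(bookmark: str, assortment: str) -> bool:
    # Stub (assumption): a real test would send a prompt to the model here.
    return assortment.lower() in bookmark.lower()


cases = [
    Case("Sourdough starter recipe", "recipe", True),
    Case("Swift concurrency guide", "recipe", False),
    Case("Weeknight dinner ideas", "recipe", True),  # needs real understanding
]

failures = run_cases(keyword_classifier, cases)
for case in failures:
    print(f"mismatch: {case.bookmark!r} in {case.assortment!r}, expected {case.expected}")
```

Swapping the stub for a function that calls an actual model would turn this into the prompt-level test the paragraph has in mind, with no hardware, network, or battery involved.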

The enormous downside of local models is that they’re orders of magnitude less powerful than frontier models, but they do come with some significant upsides. A commonly cited benefit is the privacy they offer to end users, and this is indeed compelling. But from an app developer’s perspective, it’s also particularly wonderful that you have no services to run! There’s nothing to be on-call for, nothing that can go offline in the middle of the night. (If the model stops running… it’s probably because the phone ran out of battery, and that’s not for you to fix.)

Of note, throughout this project, I had no idea what model I was actually using — did it have a name? A version? Was it specific to the particular model of iPhone? Somehow this alternated between being slightly unnerving and exceedingly natural. As long as it does what it needs to, I suppose it doesn’t matter?

My takeaway from this prototype is that, for constrained use cases, you can get a decent bit of mileage out of small models like those iOS devices provide. I don’t foresee them powering useful agentic functionality anytime soon (if ever), but the future isn’t solely agentic. As generative AI becomes commonplace and recedes into the background, I suspect local models will be useful for powering countless smaller details that make our day-to-day device usage just a bit smarter.